LINKEDIN | 👨💻 Data Cleaning & Automation

Business Problem

In this project, we, as data analysts, are focused on analyzing the job market for roles related to data analytics in different countries. Our data source comes from web scraping that we have obtained from LinkedIn, which contains information about job listings in this field. Our primary objective is to validate the quality of the data and subsequently extract key insights about the job market in the field of data analytics.

This project will attempt to answer the following questions:

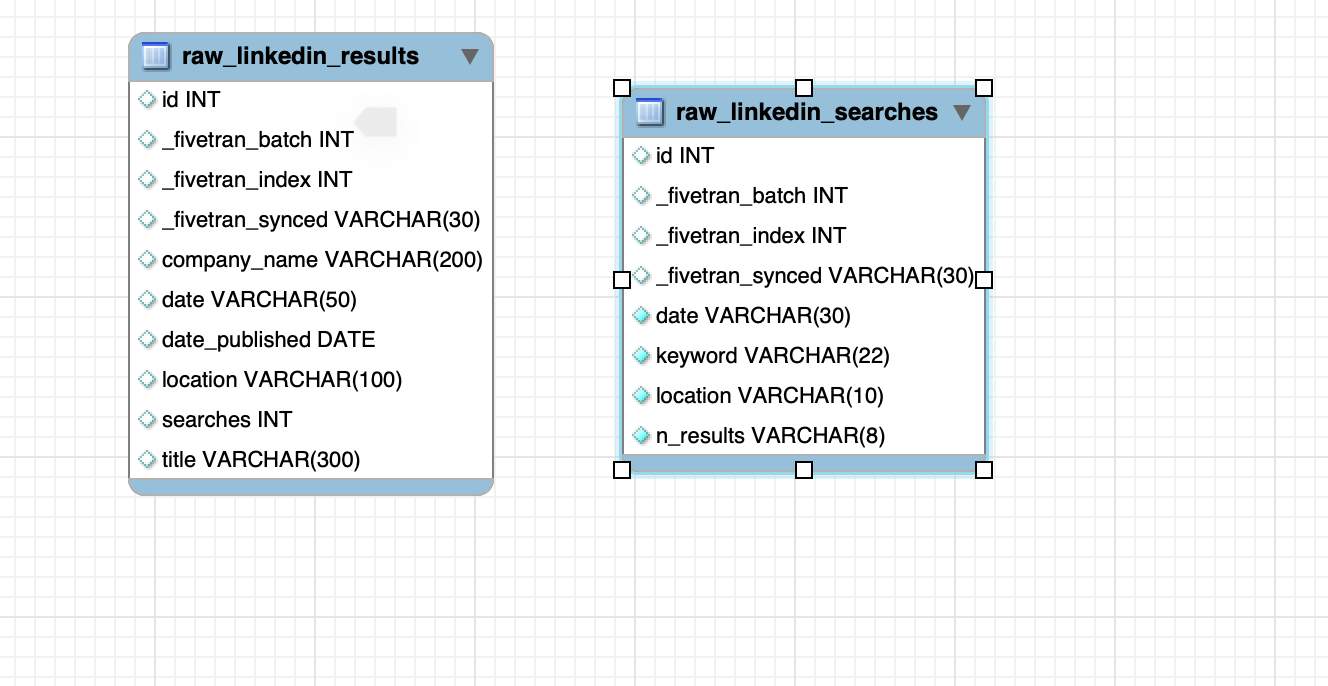

Data-Set Diagram

The data is originally in CSV format, and the data source comes from a web scraping download obtained from LinkedIn, which contains information about job listings in data analysis.

Analysis

Part I - Create "linkedin_data" Table + Stored Procedure (SP)

1. Load the "linkedin_data" database.

2. Create the "linkedin_ofertas" table with the following fields:

fecha_actualizacion (datetime) nombre_empresa (varchar(200)) fecha_busqueda_oferta_linkedin (datetime) fecha_publicacion_oferta (date) pais_oferta (varchar(200)) search_id_oferta (int) titulo_oferta (varchar(200)) Modify the date restrictions by executing the following statement: SET @@SESSION.sql_mode='ALLOW_INVALID_DATES';

3. Define the query that will clean and format the data as desired.

4. Create a stored procedure with the cleaning query to insert data into the table.

5. Create an event that executes the stored procedure daily.

Part II - Create "linkedin_busquedas" Table + Stored Procedure (SP)

1. Create the "linkedin_busquedas" table with the following fields:

id_busqueda (INT PRIMARY KEY) fecha_busqueda (datetime) fecha_actualizacion (datetime) keyword_busqueda (varchar(200)) pais_busqueda (varchar(200)) n_resultados_busqueda (int) Modify the date restrictions by executing the following statement: SET @@SESSION.sql_mode='ALLOW_INVALID_DATES';

2. Define the query that will leave the data as desired (clean it).

3. Create a stored procedure with the cleaning query to insert data into the table.

4. Create an event that executes the stored procedure daily.

Exploratory Analysis - Data Validation

In the initial phase, we conduct an exploratory analysis of our dataset to better understand it. Some queries that we execute include:

Data Cleansing & Automation

Reporting

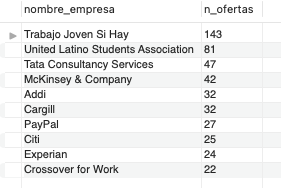

1. Which companies have the highest number of job listings?

2. How many job listings do we have in the table by location?

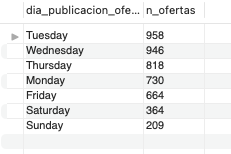

3. How many job listings do we have per day of publication?

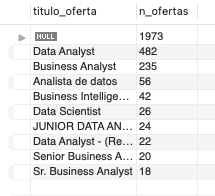

4. What are the top 10 job title roles used for posting job listings?

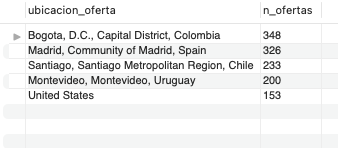

5. What are the top 5 locations with the highest number of job listings?

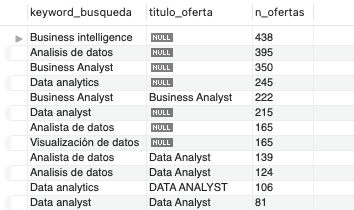

6. How many job listings combine keywords with the job title? Can you provide the count by both fields?

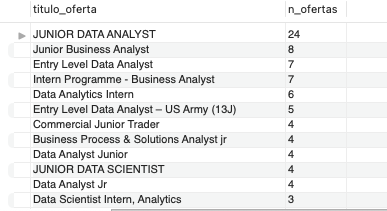

7. How many job positions are labeled as "junior"? Can you provide the count by job title?

8. Can you now provide the count of job listings with the title "junior," but broken down by country?

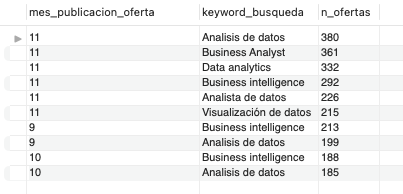

9. Can we determine the number of job listings published per month and keyword? Which months are the most prominent, and with which keywords?

Conclusions

Summary of Results:

Based on the findings, we recommend data analytics professionals consider locations with high demand, common role titles, and relevant keywords when seeking employment or specializing in this field.

Companies can use this information to guide their hiring strategies and better understand competition in hiring data analytics professionals.

We suggest improving the quality of web scraping data and exploring the possibility of incorporating additional data to enrich the analysis.

Challenges related to data quality and result interpretation were encountered. Lessons were learned about the importance of data cleaning and the need to fully understand the data context.

In future projects, best practices in data cleaning can be implemented, and advanced data mining techniques can be considered for deeper analysis.